How can I access my Buckets?

For essential tasks, such as creating a Bucket, you can use the Hetzner Console. To efficiently manage the files in your Buckets and fully leverage all the features Object Storage offers, you need to use the Hetzner S3 API via an Amazon S3 compatible tool. It is possible to use the API directly, but this is not recommended as you would have to generate the signature yourself, making it quite complicated. Here are your options summarized:

- Amazon S3 REST API with Hetzner S3 endpoint

- Tools that support an Amazon S3 compatible API (e.g. S3cmd or MinIO)

- Hetzner Console (only essential tasks)

You need an access key and a secret key to use the S3 API. You can create those via Hetzner Console. By default, you can use each access key & secret key pair to access every Bucket within the same project.

For detailed instructions on how to create and manage Buckets via an Amazon S3 compatible API tool or the Hetzner Console, navigate to Object Storage » Getting Started in the left menu bar and select your preferred option.

If you set the Bucket visibility to public, you can access your files via the URL in a web browser.

Who can make changes to Buckets?

Via the Hetzner Console, all members and above have permission to make changes to Buckets (add / delete Buckets).

Via the S3 API, basically anyone can upload / delete files — even someone without a Hetzner account.

- If you set your Bucket visibility to private, you just need to give anyone who needs access an access key and a secret key. Note that a private Bucket can include public objects if the access permissions are customized accordingly.

- If you set your Bucket visibility to public, you don't even need to provide access keys and secret keys for read access. Anyone who knows the Bucket URL and the file name can view and download those files as they want (file listing remains denied). Write access (e.g. add files) still requires an access key and a secret key.

How can I delete my Bucket?

Note that you are charged for every hour you have at least one active Bucket (see "How do I cancel my Object Storage?").

To protect data from getting deleted by accident, it is not possible to delete a Bucket that contains data.

Requirements to delete a Bucket:

- Empty — does not contain any data (neither visible nor "invisible")

- "Protection" on Hetzner Console is inactive

To empty a large Bucket, we recommend deleting the data via lifecycle policies rather than deleting it manually via an S3-compatible tool. You can find two example lifecycle policies below.

Before you permanently delete all data, run a dry run to confirm which data will be lost.

Note that aws s3 rm does not include old versions or incomplete multipart uploads.

    mc rm --dry-run --recursive --versions <alias_name>/<bucket_name>
    mc rm --dry-run --recursive --incomplete <alias_name>/<bucket_name>
    aws s3 rm --dryrun --recursive s3://<bucket_name>

To manually delete incomplete multipart uploads via the AWS CLI or S3cmd, see the FAQ entry "How do I delete leftover parts from an aborted multipart upload?".

Below are two examples of a lifecycle policy that deletes all data from a Bucket:

If versioning is disabled

For the expiration date, set the current day YYYY-MM-DD (e.g. 2025-12-31) and the current time T00:00:00Z (e.g. T23:59:00Z).

    {
      "Rules": [{
        "ID": "expiry",
        "Status": "Enabled",
        "Prefix": "",
        "Expiration": {
          "Date": "YYYY-MM-DDT00:00:00Z"
        },
        "AbortIncompleteMultipartUpload": {
          "DaysAfterInitiation": 1
        }
      }]
    }

This lifecycle policy enforces the following rules:

- Delete all objects at the exact time you set for the expiration date.

- Delete leftover parts from any incomplete multipart uploads that have been in progress for over a day.

If versioning is enabled or suspended

For the expiration date, set the current day YYYY-MM-DD (e.g. 2025-12-31) and the current time T00:00:00Z (e.g. T23:59:00Z).

    {
      "Rules": [{
        "ID": "expiry",
        "Status": "Enabled",
        "Prefix": "",
        "Expiration": {
          "Date": "YYYY-MM-DDT00:00:00Z"
        },
        "NoncurrentVersionExpiration": {
          "NoncurrentDays": 1
        },
        "AbortIncompleteMultipartUpload": {
          "DaysAfterInitiation": 1
        }
      },
      {
        "ID": "deletemarker",
        "Status": "Enabled",
        "Prefix": "",
        "Expiration": {
          "ExpiredObjectDeleteMarker": true
        }
      }]
    }

This lifecycle policy enforces the following rules:

- Convert the latest version of all objects into noncurrent versions and replace them with a delete marker at the exact time you set for the expiration date.

- Delete any versions that have been noncurrent for at least one day.

- Delete all delete markers that are the only remaining version of an object.

- Delete leftover parts from any incomplete multipart uploads that have been in progress for over a day.

As the latest version of an object becomes noncurrent before it is permanently deleted, there is a one-day delay between the expiration date of the latest versions and their complete removal from the Bucket.
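The expiration timestamp in the two policies above has to be filled in by hand. As a minimal sketch, the policy could also be generated with the current UTC time already inserted. The function name `build_delete_all_policy` and its parameters are hypothetical and chosen for illustration only; the rule contents mirror the examples above.

```python
import json
from datetime import datetime, timezone


def build_delete_all_policy(now: datetime, versioned: bool) -> dict:
    """Build a lifecycle policy that expires all objects at `now` (hypothetical helper)."""
    # Format the timestamp as YYYY-MM-DDTHH:MM:SSZ, matching the examples above.
    stamp = now.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    expiry_rule = {
        "ID": "expiry",
        "Status": "Enabled",
        "Prefix": "",
        "Expiration": {"Date": stamp},
        "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": 1},
    }
    rules = [expiry_rule]
    if versioned:
        # Versioning enabled or suspended: also remove noncurrent versions
        # and delete markers that are the only remaining version.
        expiry_rule["NoncurrentVersionExpiration"] = {"NoncurrentDays": 1}
        rules.append({
            "ID": "deletemarker",
            "Status": "Enabled",
            "Prefix": "",
            "Expiration": {"ExpiredObjectDeleteMarker": True},
        })
    return {"Rules": rules}


if __name__ == "__main__":
    policy = build_delete_all_policy(datetime.now(timezone.utc), versioned=True)
    print(json.dumps(policy, indent=2))
```

The printed JSON can be saved to a file and applied with the S3-compatible tool of your choice.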

How do I upload an entire directory at once?

This depends on the S3-compatible tool you're using, so we recommend reading its documentation. With the MinIO Client, for example, you could use this command:

    mc mirror example_directory <alias_name>/<bucket_name>

    example_directory
    ├── file1
    ├── file2
    └── file3

This will automatically upload file1, file2, and file3 to your Bucket.

Can I edit a file after uploading it to a Bucket?

No, with Object Storage you cannot edit files because objects are immutable. To "update" a file, you need to upload the new version as a new object. If you use the same object name, it will automatically overwrite the existing object.

What Bucket names are allowed?

The name has to be:

- Valid as per RFC 1123 (see "2.1 Host Names and Numbers")

- Not formatted as an IP address (e.g. 203.0.113.1)

- Unique amongst all Hetzner Object Storage users and across all locations

The name needs to be unique. This means that two different Buckets cannot share the same name, regardless of their location. This rule applies Hetzner-wide, across all locations. If another customer already has a Bucket with the name that you would prefer, you will have to come up with another name.

The Bucket name will be part of the Bucket URL, which is why it has to adhere to the host name requirements. Some of the rules include:

- You can use the alphabet (a-z), digits (0-9), minus sign (-)

- No period (.)

- No blank or space characters

- No upper case characters

- The first character must be an alpha character or a digit

- The last character must not be a minus sign

- The name must be between 3 and 63 characters long

Note that it is NOT possible to change the name once the Bucket is created.
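The naming rules listed above can be checked locally before you try to create a Bucket. The following is a minimal sketch; the function name `is_valid_bucket_name` is hypothetical, and the check only covers the syntax rules from the list. It cannot tell you whether the name is already taken by another customer.

```python
import re

# Lowercase letters, digits and minus signs only; the first character is a
# letter or digit, the last character is not a minus sign; 3 to 63 characters.
# Periods are not allowed, which also rules out names formatted as IP addresses.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` satisfies the syntax rules listed above."""
    return _BUCKET_NAME.fullmatch(name) is not None
```

For example, `is_valid_bucket_name("my-bucket-01")` returns `True`, while `is_valid_bucket_name("My.Bucket")` returns `False`.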

What object names are allowed?

When you name an object, you should note the following rules:

- Up to 1024 Bytes (equivalent to 1024 US-ASCII characters)

- You can use the alphabet (a-z) and digits (0-9)

- You can use special characters, e.g. ! - . * ' ( and )

- You can use UTF-8 characters. Note that those characters could cause issues.

- You cannot use admin as the leading prefix. However, using admin as a subdirectory is allowed.

Not allowed: admin/files/example.txt

Allowed: files/admin/example.txt

In Buckets, it is not possible to add directories or subdirectories. To get a hierarchical structure, you would need to add / to the object name.

Examples:

- website/images/example1.jpg

- website/images/example2.jpg

- backup/snapshot.bak

- backup/mysqldump.dmp
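Two of the object naming rules above are easy to get wrong: the length limit counts bytes, not characters, and the admin prefix is only a problem at the start of the name. The sketch below checks both. The function name `check_object_name` is hypothetical, and it assumes that "leading prefix" means the first path segment is exactly admin.

```python
MAX_KEY_BYTES = 1024


def check_object_name(name: str) -> list:
    """Return a list of rule violations; an empty list means none were found."""
    problems = []
    # The limit applies to the UTF-8 encoded length, so non-ASCII
    # characters count as more than one byte each.
    size = len(name.encode("utf-8"))
    if size > MAX_KEY_BYTES:
        problems.append(f"name is {size} bytes, limit is {MAX_KEY_BYTES}")
    # Assumption: only the first path segment is checked against "admin".
    if name.split("/", 1)[0] == "admin":
        problems.append("name must not use 'admin' as the leading prefix")
    return problems
```

With this sketch, `check_object_name("files/admin/example.txt")` returns an empty list, while `check_object_name("admin/files/example.txt")` reports the prefix violation.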

How long are Bucket names blocked from being re-used after deletion?

When a Bucket is deleted, the ability to re-use its name depends on the owner of the project:

| Project owner | Description |
|---|---|
| Same project owner | After a Bucket is deleted in a project, you can immediately re-use its name in any project that shares the same project owner, provided you have the necessary permissions to create a new Bucket. |
| Different project owner | 14 days after deletion and provided the name has not been re-used, the name becomes available to everyone. |

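Example:

| Project | Owner | Member(s) |
|---|---|---|
| Project A | Max Mustermann | John Doe |
| Project B | Max Mustermann | Jos Bleau |
| Project C | Different owner | - |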

Let's assume John Doe deletes a Bucket in Project A. The owner of Project A is Max Mustermann. The Bucket name immediately becomes available for re-use in all projects that also have Max Mustermann as the owner — in the example above Project A and Project B.

- Project A: Max Mustermann and John Doe can immediately re-use the name in this project.

- Project B: Max Mustermann and Jos Bleau can immediately re-use the name in this project.

- Project C: Because the owner is different, no one can re-use the name in this project until the blocking period is over.
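The re-use rule above comes down to a simple date calculation. A minimal sketch, with the hypothetical helper `name_available_at` and the 14-day blocking period taken from the table:

```python
from datetime import datetime, timedelta, timezone

BLOCKING_PERIOD = timedelta(days=14)


def name_available_at(deleted_at: datetime, same_owner: bool) -> datetime:
    """Earliest time at which the name of a deleted Bucket can be re-used."""
    # Projects with the same owner can re-use the name immediately;
    # everyone else has to wait until the blocking period is over.
    return deleted_at if same_owner else deleted_at + BLOCKING_PERIOD


deleted = datetime(2025, 1, 1, tzinfo=timezone.utc)
print(name_available_at(deleted, same_owner=True))   # Project A and Project B
print(name_available_at(deleted, same_owner=False))  # Project C
```

Note that the name only becomes available after the blocking period if nobody with the same project owner has re-used it in the meantime.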

Are Buckets moveable between projects?

It is not currently possible to move Buckets from one project to another. If you need to move data between projects, you will need to create a new Bucket in the target project and copy the objects using tools such as rclone or similar.

How do I protect my Bucket from being deleted by accident?

You can protect your Buckets with the property protected on Hetzner Console. The protected property disables deletion. Before you can delete a protected Bucket, you have to first deactivate this property.

In Hetzner Console, protected resources are indicated by a lock icon on the Bucket list view.

How do I protect my objects from getting deleted by accident?

Manually deleting objects by accident is not the only risk. When you upload a new object with the same name as an existing one in the Bucket, the existing object is automatically deleted and replaced by the new object. If you're not careful with your naming scheme, you might end up losing important data by accident.

To protect objects from getting deleted automatically, you can use versioning.

To protect objects from getting deleted manually, you can use object locking. Note that you must enable object locking when you create the Bucket, otherwise you won't be able to use it. To enable object locking during Bucket creation, you have to use an S3-compatible tool as explained in this how-to guide.

This gives you the following options to choose from:

- Versioning: manual deletion allowed.

- Object locking: manual deletion disabled.

  - Legal Hold

  - Retention

    - Governance Mode

    - Compliance Mode

For more information on each option, see these Amazon S3 articles: Versioning, Retention, Legal Hold

How do I enable object locking?

Object lock needs to be enabled during Bucket creation. It is required to set up legal hold and retention.

It is not possible to enable object lock on a Bucket that was already created without object lock.

- Hetzner Console

  When you create a new Bucket, you can set the status of "Object Lock" to Enabled.

- CLI or curl

  In the command to create a new Bucket, you need to add a header or flag that enables object locking (e.g. --object-lock-enabled-for-bucket with AWS CLI or --with-lock with the MinIO client).

What is the difference between versioning and object locking?

Versioning allows you to disable automatic deletion of objects. Each object is automatically assigned a version ID, which allows you to keep several versions of the same object in a single Bucket. If you upload an object with a name that already exists in the Bucket (e.g. file_name.txt), the existing object is not deleted; instead, the objects are distinguished via their version IDs. Manual deletion of objects is still possible.

Object Locking allows you to disable manual deletion of selected objects. With object locking, you can choose between the options "legal hold" and "retention". Legal hold protects an object from getting deleted until the legal hold is manually removed again. Retention protects an object from getting deleted until a specified time period has elapsed. Retention has two different modes: "Governance" and "Compliance".

All options in direct comparison:

| | Automatic deletion | Manual deletion | Objects with the same name |
|---|---|---|---|
| Versioning | disabled | allowed | Objects are distinguished via their version ID. |
| Legal Hold | Versioning is automatically enabled and you cannot disable it. | To delete an object, you first have to remove the legal hold. This does not require any special permissions, but it adds an extra step that can help prevent accidental deletion. | Because versioning is automatically enabled, a new object with a different version ID is added. You will need to enable the legal hold again for the new object. |
| Retention (Governance Mode) | Versioning is automatically enabled and you cannot disable it. | Only users with special permissions can end the retention period earlier and delete the object before the original retention period ended. | Because versioning is automatically enabled, a new object with a different version ID is added. You will need to set retention again for the new object. |
| Retention (Compliance Mode) | Versioning is automatically enabled and you cannot disable it. | No one can end the retention period earlier and it is not possible to delete the object before the retention period ended. | Because versioning is automatically enabled, a new object with a different version ID is added. You will need to set retention again for the new object. |

Can I delete objects when Object Lock or Versioning is enabled?

Whether or not you can delete an object depends on the features that are enabled. Note that Buckets with Object Lock always have versioning enabled and it is not possible to disable it. With versioning enabled, there are two different ways to delete an object:

- Delete marker

  If you don't specify a version ID, a delete marker with a unique version ID is added as the new latest version and the object is treated as if it was actually deleted.

- Permanent delete

  If you specify a version ID, the object with that specific version ID is deleted irrevocably.

Example

In the example below, the following objects get deleted:

- example.txt (no version specified)

- image.png (version 4564564)

Before:

| Object | Status | Version |
|---|---|---|
| example.txt | latest | 1234567 |
| example.txt | noncurrent | 8989898 |
| image.png | latest | 4564564 |
| image.png | noncurrent | 2102102 |

After:

| Object | Status | Version |
|---|---|---|
| example.txt | delete-marker | 6565665 |
| example.txt | noncurrent | 1234567 |
| example.txt | noncurrent | 8989898 |
| image.png | latest | 2102102 |
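The difference between the two delete operations in the example above can be reproduced with a small in-memory model. This is only an illustrative sketch, not how Object Storage is implemented: the `delete` function, the data layout, and the way new version IDs are generated are all assumptions.

```python
from itertools import count
from typing import Optional

# Hypothetical source of new version IDs, starting at the ID used in the example.
_new_ids = count(6565665)


def delete(versions: dict, key: str, version_id: Optional[str] = None) -> None:
    """Delete from `versions`, which maps object names to versions, newest first."""
    stack = versions[key]
    if version_id is None:
        # No version ID given: add a delete marker as the new latest version.
        stack.insert(0, {"id": str(next(_new_ids)), "delete_marker": True})
    else:
        # Version ID given: remove exactly that version, irrevocably.
        stack[:] = [v for v in stack if v["id"] != version_id]


bucket = {
    "example.txt": [{"id": "1234567", "delete_marker": False},
                    {"id": "8989898", "delete_marker": False}],
    "image.png": [{"id": "4564564", "delete_marker": False},
                  {"id": "2102102", "delete_marker": False}],
}
delete(bucket, "example.txt")           # adds a delete marker
delete(bucket, "image.png", "4564564")  # permanently removes one version
```

Afterwards, example.txt still has both of its old versions behind the delete marker, while image.png has only version 2102102 left, which is now the latest version.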

If you want to delete an object in a Bucket that has Object Lock enabled, check if retention or legal hold are enabled:

| Retention | Legal Hold | Deleting an object |
|---|---|---|
| disabled | disabled | The object can be deleted permanently. |
| disabled | enabled | The object cannot be deleted permanently until the legal hold is removed. However, it is possible to add a delete marker. |
| enabled (Governance) | disabled | To delete an object permanently, you need permissions to bypass governance mode and a bypass-governance-retention flag / header in the delete request. |
| enabled (Compliance) | disabled | The object cannot be deleted permanently before the retention period has ended. However, it is possible to add a delete marker. |

Does the visibility setting apply to all objects within a Bucket?

When you set the visibility to public during Bucket creation, we will automatically apply access policies that allow read access to all objects within the Bucket.

When you set up your own access policies, you have the option to exclusively allow read access to objects with a certain prefix (bucket_name/prefix/*) instead of allowing read access to all objects within the Bucket (bucket_name/*).

When you use a client like WinSCP, for example, you might have the option to set access permissions for individual objects. In this case, you could end up with public objects in a Bucket that is marked as "private". To avoid surprises, carefully consider any changes you make to the visibility of individual objects, and document these changes accordingly or track them in another way.

Note, however, that it is not recommended to change the visibility to public after you already added data to your Bucket. If you have to do it, double-check the contents of your Bucket and remove any sensitive data before setting the visibility to public.

The best practice is to only set empty Buckets to public and add data afterwards.
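To illustrate the difference between read access to all objects (bucket_name/*) and read access to a single prefix (bucket_name/prefix/*), the sketch below builds a policy document in the general Amazon S3 bucket policy format. The helper name `public_read_policy` is hypothetical, and whether this exact document matches the policy that is applied automatically for public Buckets is an assumption; check the policy documentation before using it.

```python
import json


def public_read_policy(bucket_name: str, prefix: str = "") -> dict:
    """Build a policy that allows anonymous read access (hypothetical helper)."""
    # An empty prefix covers every object; "prefix/" limits access to that prefix.
    resource = f"arn:aws:s3:::{bucket_name}/{prefix}*"
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": "*",
            "Action": ["s3:GetObject"],
            "Resource": [resource],
        }],
    }


if __name__ == "__main__":
    print(json.dumps(public_read_policy("bucket_name", "prefix/"), indent=2))
```

Only `s3:GetObject` is granted here, so listing the Bucket contents and writing objects still require credentials.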

Is it possible to change the visibility of existing Buckets?

Yes, this is possible. You can change the visibility via Hetzner Console or via an S3-compatible tool.

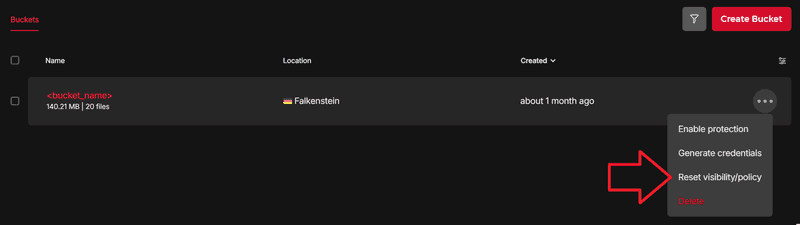

To change the visibility via Hetzner Console, navigate to "Object Storage" and select the three dots next to your Bucket. Next, click on "Reset visibility/policy" and set it to either "Private" or "Public".

The default visibility of every Bucket is "private". To grant access permissions, Object Storage uses access policies. The policies are defined in a JSON file which is applied to the Bucket.

In other words, the access policies automatically overwrite the default visibility, which is always "private".

- If you set the Bucket visibility to "public" during Bucket creation, we create and apply the access policies for you.

- If you set the Bucket visibility to "private" during Bucket creation, no access policies are added.

How to change the visibility of a Bucket via an S3-compatible tool:

- private to public: Add your own access policies

- public to private: Delete existing access policies

Instead of going fully private or fully public, you can apply access policies that simply restrict access (for example to certain IPs).

Note: If you restrict access to specific access keys, our frontend will no longer be able to retrieve the objects from this Bucket and list them in Hetzner Console, resulting in an error message.

For more information about policies and available access restrictions, check out the Amazon articles "Policies and permissions in Amazon S3" and "Examples of Amazon S3 bucket policies".

The command to apply the policies depends on the S3-compatible tool you're using, so we recommend reading its documentation (e.g. MinIO Client).

Can I access a private Bucket via a web browser?

If you want to share individual files from a Bucket with someone who doesn't have their own S3 credentials, you can presign the URL to the file with your own S3 credentials and share the resulting URL. With the presigned URL, anyone can download the file via a web browser or tools like curl or wget without having to provide their own S3 credentials. You can set a time for how long this presigned URL should be valid. After that time, you can no longer use the presigned URL to access the file.

The command to sign a URL depends on the S3-compatible tool you're using (e.g. MinIO Client, AWS CLI, rclone). With the MinIO Client, AWS CLI and S3cmd, for example, you could use these commands:

    mc share download <alias-name>/<bucket-name>/<file-name> --expire 12h34m56s # hours, minutes, seconds (default 168h = 7 days)
    s3cmd signurl s3://<bucket-name>/<file-name> 1765541532 # use https://epochconverter.com/ to convert the time
    aws s3 presign s3://<bucket-name>/<file-name> --expires-in 60480 # number of seconds (default 3600 seconds = 1 hour)

You can find instructions on how to apply lifecycle policies in the how-to guide "Applying lifecycle policies".

With the MinIO Client, you can automatically create several presigned URLs at once by not specifying a file name, which means you don't have to create those URLs one by one.

Example:

    <bucket-name>
    ├─ example-file-1
    └─ example-file-2

    mc share download <alias-name>/<bucket-name>

With the example above, you will get two signed URLs, one for example-file-1 and one for example-file-2.
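The s3cmd signurl command shown earlier in this entry expects the expiry as a Unix timestamp rather than a duration. Instead of using an online converter, the timestamp could be computed locally. This is a small sketch; the helper name `expiry_timestamp` is hypothetical.

```python
import time
from typing import Optional


def expiry_timestamp(valid_for_seconds: int, now: Optional[float] = None) -> int:
    """Unix timestamp at which a presigned URL should stop working."""
    # Use the current time unless a fixed start time is passed in.
    start = time.time() if now is None else now
    return int(start) + valid_for_seconds


if __name__ == "__main__":
    # A URL that is valid for one hour, starting now.
    print(expiry_timestamp(3600))
```

The printed value can then be used in place of the example timestamp in the s3cmd signurl command.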

Can I assign a custom domain name / CNAME to a Bucket?

This functionality is currently not available, but we may add it in the future.

With a CNAME record, the DNS provider solely points the request to the specified Bucket domain. The DNS provider does not and cannot change the request itself. This means, when we receive a request that was pointed to us via a CNAME record, the host header — which specifies the requested domain name — is still the original domain, meaning your own custom domain. Since Object Storage does not know your domain, the request will fail.

However, you can set the host header to the Bucket domain, when you forward the custom domain via your own reverse proxy or via different options:

What are lifecycle policies and how do I use them?

With lifecycle policies, you can:

- Define a timestamp or a time period after which objects expire (e.g. 30 days after creation). Expired objects are automatically deleted. When you apply lifecycle policies to a Bucket, the rules apply to all objects, existing and new.

- Define a time period after which "leftover" parts from an aborted multipart upload are automatically deleted. Without this lifecycle rule, these fragments will remain in your Bucket and utilize storage until you remove them.

For a step-by-step guide on how to apply lifecycle policies to a Bucket, you can follow this how-to: "Applying lifecycle policies".

Note that expiry of fully uploaded objects behaves differently, depending on the versioning status:

A delete marker is an empty object (no data associated with it) that has a key (object name) and a version ID. A delete marker is seen as the latest version of an object and it is treated as if the object is deleted.

| | Expiration | Noncurrent Version Expiration | Expired Object Delete Marker |
|---|---|---|---|
| No versioning | After an object expired, it is permanently deleted. | n/a | n/a |
| Versioning enabled | Only applies to the latest version of an object. After the latest version of an object expired, it becomes a "noncurrent version". A delete marker with a unique version ID is added as the new latest version and it is treated as if the object was actually deleted. | X days after an object became a "noncurrent version" (replaced by a newer version), it is permanently deleted, unless object lock is applied. | If a delete marker is the only remaining version of an object and all noncurrent versions have been permanently deleted, the delete marker is also deleted. |
| Versioning suspended | Only applies to the latest version of an object. After the latest version of an object expired, it becomes a "noncurrent version". A delete marker with version ID "null" is added as the new latest version and it is treated as if the object was actually deleted. If any of the existing versions already has the version ID "null", this existing object is automatically permanently deleted (see this FAQ). | Same as for versioning enabled. | Same as for versioning enabled. |

Example

Versioning disabled

- Object A
  Created: 31 days ago

- Object B
  Created: 18 days ago

Versioning enabled

- Object C (latest)
  Created: 44 days ago

- Object C (noncurrent)
  Created: 94 days ago
  Noncurrent since: 44 days ago

Versioning suspended

- Object D (delete-marker)
  Created: 91 days ago

- Object E (latest)
  Created: 31 days ago
  Version ID: null

Let's apply the following example lifecycle configuration:

    {
      "Rules": [{
        "ID": "expiry",
        "Status": "Enabled",
        "Prefix": "",
        "Expiration": {
          "Days": 30
        },
        "NoncurrentVersionExpiration": {
          "NoncurrentDays": 15
        }
      },
      {
        "ID": "deletemarker",
        "Status": "Enabled",
        "Prefix": "",
        "Expiration": {
          "ExpiredObjectDeleteMarker": true
        }
      }]
    }

After this lifecycle configuration is applied, the Buckets will look like this:

Versioning disabled

- Object B
  Created: 18 days ago

Versioning enabled

- Object C (delete-marker)
  Created: 1 day ago

- Object C (noncurrent)
  Created: 44 days ago
  Noncurrent since: 1 day ago

Versioning suspended

- (empty)

How do I delete leftover parts from an aborted multipart upload?

To prevent leftover parts from accumulating unnoticed and taking up more storage space, you can set up lifecycle policies to delete them automatically. If you prefer more control over which data is deleted, you can manually remove them instead.

- Automatic deletion via lifecycle policy

  In lifecycle policies, you can set AbortIncompleteMultipartUpload. Its value defines after how many days the "leftover" parts of an aborted multipart upload should automatically get deleted. For more information, see:

- Manual deletion

  The commands depend on the S3-compatible tool you're using, so we recommend reading its documentation. With S3cmd and the AWS CLI, for example, you could use these commands:

  - List ongoing or aborted multipart uploads

        s3cmd multipart s3://<bucket_name>
        aws s3api list-multipart-uploads --bucket <bucket_name>

    Copy the upload ID from the output.

  - List parts of a multipart upload

        s3cmd listmp s3://<bucket_name>/example.zip <upload_id>
        aws s3api list-parts --bucket <bucket_name> --key example.zip --upload-id <upload_id>

  - Delete all parts of an aborted multipart upload

        s3cmd abortmp s3://<bucket_name>/example.zip <upload_id>
        aws s3api abort-multipart-upload --bucket <bucket_name> --key example.zip --upload-id <upload_id>

How do I mount a Bucket in a local filesystem?

In general, we recommend sticking with native S3 protocols to avoid latency, and thus performance, issues. If a filesystem is still your preferred method, there are several tools available that provide a filesystem translation layer on top of S3-compatible object storage:

s3fs would also be an option, but we do not recommend using it because it generates a high number of S3 requests (e.g. for metadata queries). This can lead to issues with API request limits.

Can I check the storage and network utilization via API?

No. Currently, we provide only an S3-compatible API for managing Buckets and their data. A dedicated API for Hetzner-specific features — such as generating S3 credentials or checking storage and traffic usage — is not yet available.